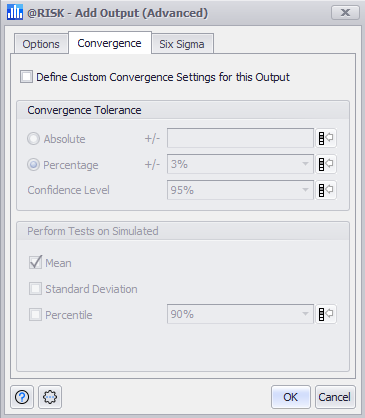

Convergence Settings

Figure 1 - Output Convergence Settings

The convergence of an Output is a measure of how the statistics (e.g. Mean) for that Output Distribution change as additional iterations are run during a simulation.
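As a rough illustration of this idea (not @RISK's internal calculation), the sketch below tracks the mean of a hypothetical Output at several iteration counts. The normal distribution, its parameters, the seed, and the helper name `running_means` are all assumptions chosen for the example.

```python
import random
import statistics

def running_means(samples, checkpoints):
    """Return the sample mean of the first n values for each n in checkpoints."""
    return [statistics.fmean(samples[:n]) for n in checkpoints]

# Hypothetical Output values: 10,000 draws from a normal distribution
# (mean 100, standard deviation 20). The seed is fixed for reproducibility.
rng = random.Random(42)
samples = [rng.gauss(100.0, 20.0) for _ in range(10_000)]

checkpoints = [100, 1_000, 10_000]
means = running_means(samples, checkpoints)
for n, m in zip(checkpoints, means):
    print(f"after {n:>6} iterations: mean = {m:.2f}")
```

As more iterations are included, the printed mean typically moves less from one checkpoint to the next; that stabilization is what convergence monitoring measures.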

Convergence monitoring for a simulation works together with the number of iterations run; when using monitoring, it is best practice to set the number of iterations for the simulation to 'Automatic'.

Convergence testing is turned off by default and can be enabled for the entire Simulation; see the Convergence Settings page under the Simulate section for more information. Individual Outputs can also be configured to use their own convergence settings in the Convergence tab of the Add Output (Advanced) window (Figure 1, right).

When an Output is configured to use its own convergence settings, the @RISK property function RiskConvergence is added to that Output's function as an optional argument.

When the number of iterations for the Simulation is set to 'Automatic', at most 50,000 iterations will run.

Convergence Tolerance

Tolerance settings specify the thresholds for the Output being monitored. For example, in Figure 1 above, the Output is configured so that iterations continue to run until there is a 95% chance (a Confidence Level of 95%) that the mean of the Output falls within 3% of its true value. These settings apply to the statistic selected in the Perform Tests on Simulated section; see below for more information.
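The stopping rule described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not @RISK's actual algorithm: it uses a normal-approximation confidence interval for the mean, treats the running mean as a stand-in for the unknown true value, and checks in batches of 100 iterations. The function name `run_until_converged`, the batch size, and the sample distribution are hypothetical; the 3% tolerance, 95% confidence level, and 50,000-iteration cap come from the text.

```python
import math
import random
import statistics

MAX_ITERATIONS = 50_000  # cap stated for the 'Automatic' setting

def run_until_converged(draw, tolerance=0.03, confidence=0.95,
                        batch=100, max_iter=MAX_ITERATIONS):
    """Draw values until the confidence interval for the mean is tight enough.

    Returns (iterations_run, mean, converged). Assumes a normal approximation
    for the sampling distribution of the mean (an assumption of this sketch).
    """
    # Two-sided normal quantile, e.g. about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    values = []
    while len(values) < max_iter:
        values.extend(draw() for _ in range(batch))
        mean = statistics.fmean(values)
        half_width = z * statistics.stdev(values) / math.sqrt(len(values))
        # Converged when the interval half-width is within tolerance of the mean.
        if mean != 0 and half_width <= tolerance * abs(mean):
            return len(values), mean, True
    return len(values), statistics.fmean(values), False

rng = random.Random(0)  # fixed seed for reproducibility
n, mean, converged = run_until_converged(lambda: rng.gauss(100.0, 20.0))
print(n, round(mean, 2), converged)
```

A tighter tolerance or a higher confidence level requires more iterations before the test passes; if the cap is reached first, the sketch reports that the Output did not converge.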

Perform Tests on Simulated

The statistic selected in this section is the statistic that will be used to monitor convergence.