Convergence Settings

In @RISK, convergence is the process by which the statistics of output distributions stabilize as more iterations are run. Monitoring convergence can make a simulation more efficient. After a certain number of iterations the output statistics no longer change significantly, so additional iterations add little information. The Convergence tab controls whether and how @RISK monitors convergence, and the threshold at which an output's statistics are considered to have converged.

Please note: monitoring convergence can increase the runtime of a simulation. If the number of iterations to run is already known and the fastest possible simulation is needed, turn convergence monitoring off.

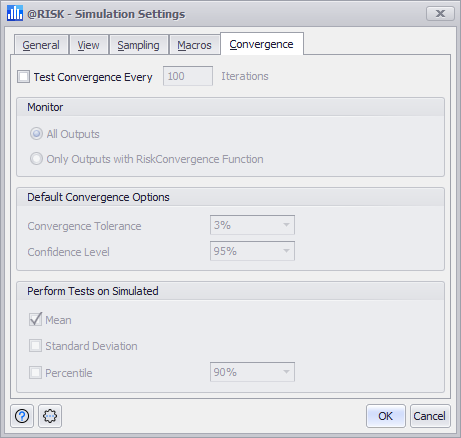

Figure 1 - Simulation Settings Window - Convergence Tab

The Convergence tab of the Simulation Settings window (Figure 1, right) contains three groups of options: Monitor Options, Default Convergence Options, and Perform Tests on Simulated.

To enable these options, check the 'Test Convergence Every' box at the top of the tab and set how often the output statistics will be tested for convergence.

Monitor Options

This section determines which output distributions will be monitored for convergence.

Default Convergence Options

These options set the default convergence tolerance and confidence level applied to each output's monitored statistics.
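One common way to combine a tolerance and a confidence level, offered here as an assumption rather than a description of @RISK's internal test, is to treat a mean as converged once its confidence interval is narrow relative to the estimate itself. With $n$ iterations, sample mean $\bar{x}_n$, sample standard deviation $s_n$, confidence level $c$, relative tolerance $\tau$, and $z_{(1+c)/2}$ the standard normal quantile, the criterion and a worked example with illustrative values ($c = 0.95$, $\tau = 0.03$, $\bar{x}_n \approx 100$, $s_n \approx 20$) are:

```latex
z_{(1+c)/2}\,\frac{s_n}{\sqrt{n}} \;\le\; \tau\,\lvert \bar{x}_n \rvert
\qquad\Longrightarrow\qquad
1.96 \cdot \frac{20}{\sqrt{n}} \le 0.03 \cdot 100
\;\Longrightarrow\;
n \ge \left(\frac{1.96 \cdot 20}{3}\right)^{2} \approx 170.7
```

Under these assumptions, about 171 iterations would be needed before the mean meets a 3% tolerance at 95% confidence. A tighter tolerance or a higher confidence level increases that number.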

Perform Tests on Simulated

These options determine which statistics of the simulated output distributions are tested for convergence.

To add a statistic to convergence monitoring, check the box next to its name. If 'Percentile' is selected, also specify which percentile to monitor.
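The following is a generic sketch of how the three option groups fit together for a single monitored output: test every N iterations, compare the statistic against a tolerance at a given confidence level, and stop once it passes. It is not @RISK's API or internal algorithm. The function `run_until_converged`, its parameters, and the relative half-width test are hypothetical, the values of 100 iterations, 3% tolerance, and 95% confidence are illustrative assumptions, and only the mean is tested (standard deviation and percentile tests are omitted).

```python
# Hypothetical sketch of convergence monitoring for one output's mean.
# Not @RISK's API or internal algorithm; names and defaults are illustrative.
import math
import random
from statistics import NormalDist, fmean, stdev


def run_until_converged(draw, test_every=100, tolerance=0.03,
                        confidence=0.95, max_iterations=100_000):
    """Run iterations until the estimated mean of the output converges.

    draw: zero-argument function returning one simulated output value
    test_every: number of iterations between convergence tests (>= 2)
    tolerance: allowed confidence-interval half-width, relative to |mean|
    confidence: confidence level used to build that interval
    """
    # Two-sided critical value, about 1.96 for a 95% confidence level.
    z = NormalDist().inv_cdf((1 + confidence) / 2)
    samples = []
    half_width = math.inf
    while len(samples) < max_iterations:
        # Run one batch of iterations between convergence tests.
        samples.extend(draw() for _ in range(test_every))
        mean = fmean(samples)
        half_width = z * stdev(samples) / math.sqrt(len(samples))
        # Converged once the mean is pinned down to within
        # +/- tolerance * |mean| at the requested confidence level.
        if half_width <= tolerance * abs(mean):
            return {"converged": True, "iterations": len(samples),
                    "mean": mean, "half_width": half_width}
    # Iteration budget exhausted without meeting the tolerance.
    return {"converged": False, "iterations": len(samples),
            "mean": fmean(samples) if samples else math.nan,
            "half_width": half_width}


# Example: an output that is normally distributed with mean 100, sd 20.
rng = random.Random(42)
result = run_until_converged(lambda: rng.normalvariate(100, 20))
print(result["converged"], result["iterations"], round(result["mean"], 2))
```

In this sketch each test recomputes the statistics over all samples collected so far, so testing more often costs more time. That is a simplified picture of why convergence monitoring can lengthen a run when the required number of iterations is already known.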